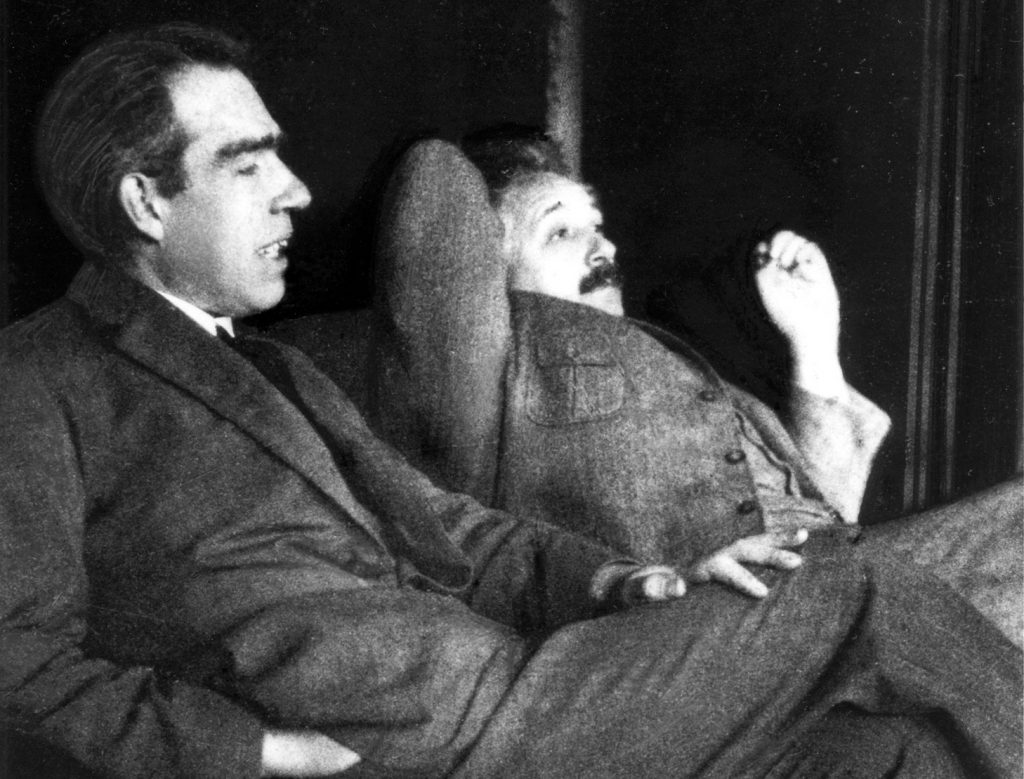

Browsing citation databases is a boring, but sometimes revealing, exercise. For example, if you search Google Scholar or another database for the name ‘Albert Einstein’, you might be surprised to discover that his most cited paper (with about 20 times more hits than any one of his three 1905 papers, or any paper on general relativity) is the 1935 Physical Review article universally known as the “Einstein-Podolsky-Rosen paradox”, or EPR for short, and titled “Can quantum-mechanical description of physical reality be considered complete?”. This paper received no more than 100 citations until about 1970, when it started to attract interest: by the year 2000 it had accumulated about 2,000 citations, then more than 10,000 in the next ten years, 6,000 more in the following five, and close to another 6,000 in the last five years. All this because of the challenge posed by another paper, whose anniversary was celebrated just this past Friday. November 4 is celebrated every year as “John Bell Day” at Queen’s University Belfast, and at many other universities around the world. It is the day in 1964 when John Stewart Bell, then working at CERN on theoretical particle physics and accelerator design, mailed his work “On the Einstein-Podolsky-Rosen paradox” to a little-known and soon-to-disappear journal [Phys. Phys. Fiz. 1 (1964) 195]. As trained physicists, we are all aware of the conflict of ideas that arose in the early 1930s around the famous Copenhagen interpretation of quantum mechanics (QM), popularized by Bohr as “the end of physics”. In short, this is the idea that the wavefunction is all there is to the physics, and that the mathematical formalism used to manipulate it accounts for all possible experimental measurements of all possible physical observables. We also all remember Einstein’s profound dislike of this idea.
Bell’s paper was meant to support Einstein’s point of view, by proposing ‘hidden variables’ as the natural way to complete the probabilistic worldview of QM and to restore classical determinism and the locality of interactions. Bell was also driven by what could be called an ideological standpoint. As he told science writer Jeremy Bernstein: “I felt that Einstein’s intellectual superiority over Bohr, in this instance, was enormous; a vast gulf, between the man who saw clearly what was needed, and the obscurantist.” In a paradox upon the paradox, Bell was to show that Einstein was wrong on this question, the opposite of what he had set out to prove.

Meanwhile, the strange title of the short-lived Physics Physique Физика journal caught the attention of future Nobel laureate John Clauser, who discovered Bell’s paper in it and began to consider how to perform a Bell test experiment. He and Stuart Freedman eventually achieved this in 1972, with a first result that appeared to prove Bohr right and Einstein wrong. However, results obtained by other groups in the following years were not so clear-cut. With the technology of the time, it was difficult to perform experiments on correlated pairs of photons with low enough noise, and to build an experimental setup that matched Bell’s highly idealized gedankenexperiment. Measuring Bell’s inequalities precisely was a cumbersome and very indirect task. So, in 1974 a young professor at the Institut d’Optique in Paris, Christian Imbert, handed that obscure paper to his new PhD student Alain Aspect, suggesting that he look for an experiment that could test it. Both were dubious about the whole thing, so Aspect went to CERN to talk directly to John Bell. To Aspect’s dismay, Bell told him that he saw his own work on the foundations of quantum mechanics as nothing more than an amusing hobby, and asked whether Aspect had a permanent position (implying: one that would allow him to waste his time on such a silly subject). Aspect replied that he did, which apparently surprised Bell, given his visitor’s young age. Like Bell, Aspect came home with the profound conviction that Einstein could not be wrong on such a question, and set out to build the experiment that would give it a definitive answer. Leveraging advances in laser technology, Aspect, with P. Grangier, J. Dalibard and G. Roger, built a calcium-jet source powered by two krypton lasers that improved the signal-to-noise ratio by a factor of thousands compared to previous experiments.
Bell’s inequality states that, in any local hidden-variable theory, the correlation between the polarizations of the two entangled photons emitted by the Ca atom and travelling in opposite directions is bounded by a maximum value. QM, on the other hand, predicts that for suitable orientations of the two polarizers the inequality is violated: the result obtained on one photon appears to be fixed at once by the measurement performed on the other, distant one, which is the problem of non-locality. By 1982 Aspect’s results had been published, showing a violation of Bell’s inequality by more than 40 standard deviations, in agreement with the QM prediction.
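
To make the “maximum value” concrete, here is a minimal sketch assuming the CHSH form of the inequality. In a local hidden-variable model each photon carries predetermined answers, +1 or −1, for each of the two polarizer settings on its side; the code enumerates all 16 such deterministic assignments and evaluates the CHSH combination S. A random hidden variable is only a weighted average over these cases, so it cannot exceed the same bound. The function name `chsh` is hypothetical, chosen for illustration.

```python
from itertools import product

def chsh(a0, a1, b0, b1):
    """CHSH combination for predetermined +/-1 answers (hypothetical helper):
    a0, a1 are side A's answers for its two settings, b0, b1 are side B's."""
    return a0 * b0 + a0 * b1 + a1 * b0 - a1 * b1

# Every deterministic local strategy: 2^4 = 16 sign assignments.
values = [chsh(*signs) for signs in product((+1, -1), repeat=4)]

print(max(values), min(values))  # 2 -2
```

The bound also follows algebraically: S = a0(b0 + b1) + a1(b0 − b1), and one of the two brackets is always 0 while the other is ±2.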

Like the 1935 EPR paper, Bell’s paper received just a couple of hundred citations for about 40 years, until the early 2000s, while in the past few years the count has jumped to tens of thousands. No doubt this work sparked an intense revival of interest in the foundations of QM, but such an abstruse subject, mostly reserved to epistemologists, science historians and philosophers, would not justify its immense popularity today. What makes Bell’s work so interesting is its relevance to the latest developments in quantum technologies. Perhaps by serendipity, Bell paved the road to quantum information and quantum computing. His inequality can be recast (in the form later given by Clauser, Horne, Shimony and Holt, known as CHSH) so that any local theory gives a value of at most 2, while the maximum violation predicted by QM, reached for maximally entangled states, is 2·sqrt(2). These maximally entangled two-qubit states are today called ‘Bell states’; the four of them form a basis, the ‘Bell basis’, of the 4-dimensional Hilbert space of a pair of qubits. While measuring a single qubit gives a random result, measuring the second one in the same basis gives a perfectly correlated (or, depending on the Bell state, anticorrelated) outcome. Bell’s work made it possible to show that such quantum correlations are stronger than any classical correlation, which can be interpreted as QM allowing information processing beyond the limits of classical computing. Indeed, the announcement of the 2022 Nobel Prize in Physics reads: “Alain Aspect, John Clauser and Anton Zeilinger have each conducted groundbreaking experiments using entangled quantum states, where two particles behave like a single unit even when they are separated. Their results have cleared the way for new technology based upon quantum information.”
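
The value 2·sqrt(2) can be checked with a few lines of linear algebra. This sketch assumes spin-like observables cos θ·Z + sin θ·X measured on the Bell state (|00⟩ + |11⟩)/√2, for which the correlation works out to E(a, b) = cos(a − b), and the standard CHSH settings a = 0, a′ = π/2, b = ±π/4; local hidden-variable models satisfy |S| ≤ 2. For photon polarization, as in Aspect’s experiments, the corresponding polarizer angles are halved (0°, 45°, ±22.5°). Pure Python is used so the sketch has no dependencies; all helper names are hypothetical.

```python
import math

Z = [[1.0, 0.0], [0.0, -1.0]]
X = [[0.0, 1.0], [1.0, 0.0]]

def observable(angle):
    """Spin-like observable cos(angle)*Z + sin(angle)*X, eigenvalues +1 and -1."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c * Z[i][j] + s * X[i][j] for j in range(2)] for i in range(2)]

def kron(A, B):
    """Tensor product of two 2x2 matrices, basis order |00>, |01>, |10>, |11>."""
    return [[A[i // 2][j // 2] * B[i % 2][j % 2] for j in range(4)] for i in range(4)]

def expectation(op, psi):
    """<psi|op|psi> for a real 4-component state vector."""
    return sum(psi[i] * op[i][j] * psi[j] for i in range(4) for j in range(4))

phi_plus = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]  # (|00> + |11>)/sqrt(2)

def correlation(a, b):
    """E(a, b): average product of the two +/-1 outcomes on phi_plus."""
    return expectation(kron(observable(a), observable(b)), phi_plus)

a0, a1 = 0.0, math.pi / 2
b0, b1 = math.pi / 4, -math.pi / 4
S = correlation(a0, b0) + correlation(a0, b1) + correlation(a1, b0) - correlation(a1, b1)
print(S, 2 * math.sqrt(2))  # both about 2.828
```

Each of the four correlations equals ±cos(π/4), so the four terms add up to 4·(√2/2) = 2√2, exceeding the local bound of 2.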

However, the philosopher of physics Tim Maudlin points out a subtlety (or a mistake?) in the Nobel Committee statement, which appears to imply that the demonstrated violations of Bell inequalities rule out hidden-variable completions of QM. To be precise, Bell’s analysis indicates that we must give up searching for a local theory of physics, be it QM or some other theory. Had Bell not died unexpectedly of a stroke in 1990, he could well have shared this year’s Nobel, and could have defended a more precise formulation. He was a supporter of David Bohm’s ‘pilot wave’ theory, and to formulate his statements, in the 1964 paper and in the next one [Rev. Mod. Phys. 38 (1966) 447 – actually the first to be written, but published later], he used precisely a hidden-variable model, pointing out that it is locality that fails when correlations are stronger than classically expected. According to his 1966 paper, a non-local hidden-variable theory would have no problem violating the inequalities. How such a theory would survive Ockham’s razor is debatable, but for now this remains a matter of speculation. Anton Zeilinger, perhaps the most ‘modern’ of the three Nobel laureates, seems to have put a nail in the coffin of a class of non-local realistic theories as well, with some of his experiments [Nature 446 (2007) 871]. With D. Greenberger and M. Horne, he extended the proof to states of three or more particles [the GHZ theorem, Am. J. Phys. 58, 1131–1143 (1990)], which opened the way to his subsequent experiments on hyperdense quantum coding (more than 2 bits per pair of entangled particles), quantum cryptography, and quantum teleportation. For the latter, he performed revolutionary experiments distributing entangled photons over distances of hundreds of km, in open air and under water, and even between Earth and a satellite: feats now pursued by other groups as well, and regarded as the first steps towards a global-scale quantum internet.
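
The three-particle argument can be checked in the same spirit. This sketch assumes the commonly quoted form of the GHZ argument, with Pauli X and Y measurements on the state (|000⟩ + |111⟩)/√2: QM predicts XXX = +1 and XYY = YXY = YYX = −1 with certainty, while no assignment of predetermined ±1 values to each particle can satisfy all four conditions at once. Unlike Bell’s inequality, the contradiction does not rely on statistics. Helper names are hypothetical.

```python
import math
from itertools import product

X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]

# GHZ state (|000> + |111>)/sqrt(2); index bits are (qubit 1, qubit 2, qubit 3)
ghz = [0j] * 8
ghz[0b000] = ghz[0b111] = 1 / math.sqrt(2)

def bit(n, k):
    """Value of qubit k (0, 1, 2 from the left) in basis index n."""
    return (n >> (2 - k)) & 1

def expectation(ops, psi):
    """<psi| ops[0] (x) ops[1] (x) ops[2] |psi> for a 3-qubit state."""
    total = 0j
    for i in range(8):
        for j in range(8):
            element = 1
            for k, op in enumerate(ops):
                element *= op[bit(i, k)][bit(j, k)]
            total += psi[i].conjugate() * element * psi[j]
    return total.real

for name, ops in [("XXX", [X, X, X]), ("XYY", [X, Y, Y]),
                  ("YXY", [Y, X, Y]), ("YYX", [Y, Y, X])]:
    print(name, round(expectation(ops, ghz), 6))

# Local realism: predetermined values v = (x1, x2, x3, y1, y2, y3), each +/-1.
consistent = [v for v in product((1, -1), repeat=6)
              if v[0] * v[1] * v[2] == 1        # x1 x2 x3 = +1
              and v[0] * v[4] * v[5] == -1      # x1 y2 y3 = -1
              and v[3] * v[1] * v[5] == -1      # y1 x2 y3 = -1
              and v[3] * v[4] * v[2] == -1]     # y1 y2 x3 = -1
print(len(consistent))  # 0
```

The empty list has a one-line explanation: multiplying the last three conditions gives x1·x2·x3·(y1·y2·y3)² = −1, i.e. x1·x2·x3 = −1, contradicting the first.
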
Yet some of Zeilinger’s most influential work goes to the very heart of the notion of objective reality.

Niels Bohr once said: “It is wrong to think that the task of physics is to find out how Nature is. Physics concerns what we can say about Nature”. If we pay attention to this subtle difference, some of the most mysterious aspects of Nature seem to make a lot more sense. Is physics really trying to find ‘the mathematical laws that govern the universe’? After all, an electron does not have to solve the Schrödinger equation to do what it does. Our laws of Nature are just models. So, maybe the job of physics is limited to coming up with rules (‘laws’) that make verifiable predictions about the universe. But some of the founders of quantum theory were convinced that physics stands one step further removed: what we actually model is merely the result of our observations, not the universe itself. In this view, the mathematical laws of physics do not govern reality (as Galileo would have it), nor are they even models of reality (as Newton would have it). Rather, they are models of our experience of reality: physics models our information about the world. Zeilinger proposed an ‘informational’ approach to QM, in which the universe is no longer broken down into its physical components, but into bits of information [Found. Phys. 29 (1999) 631]. But what does it mean to use (quantum) bits of information as the building blocks of the universe? Information represents our knowledge: ultimately, it is the result of a series of experiments. So, our new building block becomes a statement about the information we measure, for example the position, the velocity, or the mass of a particle.

And how do we build up a new universe made only of information? If each building block is an answer to a question (an experiment), the smallest unit should be a question with the smallest possible number of outcomes: ideally, a yes/no question, that is, a single bit. Any more complex question, according to this view, can be reduced to a sequence of well-chosen binary questions. Surprisingly, under this approach much of the weirdness of QM suddenly makes sense: indeterminacy, entanglement and non-locality all turn out to be just the expected behavior of such an information system. In a Stern-Gerlach experiment, we ask whether the spin of a particle is parallel or antiparallel to a given measurement direction. If we prepare a beam with all spins “up”, a magnet oriented along the same axis gives a definite answer: all the particles go “up”, and we have used the single bit of information loaded into the particles at the start. But now turn the magnet by 90 degrees and ask the same beam whether the spin points left or right. Half of the particles go left and half go right: they are in a superposition of both states, and the measurement ‘collapses’ each of them randomly into one of the two. Their single bit of information has already been used, so any other question has an undetermined answer. Moreover, if we now send each of the left and right beams through a further magnet oriented like the original one, we no longer see all the particles go “up”: each beam splits into half up and half down. The initial information has been erased by the left/right measurement. By thinking of quantum systems as carriers of single bits of information, we see that indeterminacy arises naturally.
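
The sequence of magnets can be traced with the Born rule for a spin-1/2 particle. This sketch assumes ideal magnets whose axes lie in the x-z plane, with the “left/right” magnet taken along x, so real amplitudes suffice: the “up” state along an angle θ from z has amplitudes (cos θ/2, sin θ/2), each outcome occurs with probability equal to the squared overlap, and the state then collapses onto that outcome. Function names and the “right”/“left” labelling of the x outcomes are hypothetical choices for illustration.

```python
import math

def eigenstates(theta):
    """Spin-1/2 'up' and 'down' states along a direction at angle theta
    (radians) from z, in the x-z plane; real amplitudes suffice here."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, s), (-s, c)

def measure(branches, theta, labels=("up", "down")):
    """Ideal Stern-Gerlach magnet along theta, applied to every branch.
    A branch is (outcome history, probability, state). Born rule: each outcome
    has probability |<outcome|state>|^2 and the state collapses onto it."""
    result = []
    for history, prob, state in branches:
        for label, vec in zip(labels, eigenstates(theta)):
            amp = vec[0] * state[0] + vec[1] * state[1]
            p = amp * amp
            if p > 1e-12:  # skip outcomes that cannot occur
                result.append((history + (label,), prob * p, vec))
    return result

beam = [((), 1.0, (1.0, 0.0))]                           # all spins prepared "up"
first = measure(beam, 0.0)                                # same axis: certain answer
second = measure(first, math.pi / 2, ("right", "left"))  # magnet turned by 90 degrees
third = measure(second, 0.0)                              # back to the original axis

for history, prob, _ in third:
    print(" -> ".join(history), round(prob, 3))
```

The first magnet yields “up” with certainty, the turned magnet splits the beam 50/50, and the last magnet finds a quarter of the original beam in each of the four up/down branches, so only half of the particles come out “up” again.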

Entanglement also fits in this picture. Let’s look at just two particles, as in Aspect’s experiments. This time we prepare the two particles so that the spin of the first is “up” whenever the spin of the second is “down”. Since a spin carries only one bit of information, that bit has now been used to specify the relationship with the other particle: it is no longer contained in either particle alone, but shared between the two. The two particles together carry two bits, but each bit is distributed between them, non-locally. When we interrogate one of the particles about its spin, we automatically learn the spin of the other as well, however far apart the two are. The information has now become local to each particle, in an apparently instantaneous collapse. But what we actually did was to use half of the information in preparing the state, so it should not be surprising that after measuring the other half we are left with zero uncertainty: we have used all the information available. The classical measure of information is Shannon’s entropy; its quantum counterpart is the von Neumann entropy of quantum statistical mechanics, which reduces to the Shannon entropy of the eigenvalues of the density matrix. In this framework one can derive quantitative relations known as entropic uncertainty relations, which could shed light on the most mysterious and shocking feature of QM, wave-particle duality. Coles et al. [Nature Comm. 5 (2014) 5814] applied entropic uncertainty to analyze an experiment in which a photon can behave like a particle or a wave, depending on the optical path taken: it can take either one of two paths (particle) or both (wave), but one possibility excludes the other. Here, wave-particle duality arises because it is impossible to answer two questions at the same time: are you a particle? or, are you a wave?
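
Both quantities in the paragraph above are easy to compute for a single qubit or a pair of qubits. This sketch assumes real amplitudes and shows two textbook facts: one qubit of a Bell state has a von Neumann entropy of exactly 1 bit (its information lives only in the pair), while one qubit of a product state has 0; and, as an example of an entropic uncertainty relation, the Maassen–Uffink bound H(Z) + H(X) ≥ 1 bit for the two complementary qubit measurements Z and X. This is a standard relation chosen for illustration, not a reconstruction of the Coles et al. analysis; function names are hypothetical.

```python
import math

def shannon(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 1e-15)

def reduced_entropy(psi):
    """Von Neumann entropy (bits) of qubit A for a two-qubit pure state with
    real amplitudes psi = [c00, c01, c10, c11]."""
    # Reduced density matrix rho_A = Tr_B |psi><psi| = [[a, b], [b, d]]
    a = psi[0] ** 2 + psi[1] ** 2
    d = psi[2] ** 2 + psi[3] ** 2
    b = psi[0] * psi[2] + psi[1] * psi[3]
    # Eigenvalues of rho_A; the von Neumann entropy is their Shannon entropy
    root = math.sqrt((a - d) ** 2 + 4 * b * b)
    return shannon([(a + d + root) / 2, (a + d - root) / 2])

def zx_entropies(theta):
    """Outcome entropies (bits) of Z and X measurements on the qubit state
    cos(theta/2)|0> + sin(theta/2)|1>."""
    pz = math.cos(theta / 2) ** 2   # P(Z = +1)
    px = (1 + math.sin(theta)) / 2  # P(X = +1)
    return shannon([pz, 1 - pz]), shannon([px, 1 - px])

bell = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]   # (|00> + |11>)/sqrt(2)
product_state = [1.0, 0.0, 0.0, 0.0]                     # |00>
print(reduced_entropy(bell), reduced_entropy(product_state))

# Maassen-Uffink bound for Z and X on a qubit: H(Z) + H(X) >= 1 bit
min_sum = min(sum(zx_entropies(2 * math.pi * k / 360)) for k in range(360))
print(min_sum)
```

The minimum of about 1 bit is reached, for example, by a spin prepared along z: the Z answer is certain, and the X answer is then a fair coin toss, which is the “one bit, one question” picture in numerical form.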

Ultimately, this year’s Nobel seems to say that all we could ever know about the universe is how we interact with it: quantum information may be both the limit and the door to all wonders. (As an aside, I note that Clauser has an h-index of 29, Aspect 70, Zeilinger 140: does that tell you anything?)